Scaling Efficient Masked Image Modeling on Large Remote Sensing Dataset

Authors: Fengxiang Wang, Hongzhen Wang, Di Wang, Zonghao Guo, Zhenyu Zhong, Long Lan, Jing Zhang, Zhiyuan Liu, Maosong Sun

Paper: https://arxiv.org/abs/2406.11933

Code: https://github.com/Fengxiang23/SelectiveMAE

Published: 2024.06.17

Abstract:

Masked Image Modeling (MIM) has become an essential method for building foundational visual models in remote sensing (RS). However, the limitations in size and diversity of existing RS datasets restrict the ability of MIM methods to learn generalizable representations. Additionally, conventional MIM techniques, which require reconstructing all tokens, introduce unnecessary computational overhead. To address these issues, we present a new pre-training pipeline for RS models, featuring the creation of a large-scale RS dataset and an efficient MIM approach. We curated a high-quality dataset named OpticalRS-13M by collecting publicly available RS datasets and processing them through exclusion, slicing, and deduplication. OpticalRS-13M comprises 13 million optical images covering various RS tasks, such as object detection and pixel segmentation. To enhance efficiency, we propose SelectiveMAE, a pre-training method that dynamically encodes and reconstructs semantically rich patch tokens, thereby reducing the inefficiencies of traditional MIM models caused by redundant background pixels in RS images. Extensive experiments demonstrate that OpticalRS-13M significantly improves classification, detection, and segmentation performance, while SelectiveMAE more than doubles training efficiency. This highlights the effectiveness and scalability of our pipeline in developing RS foundational models.

Method

Pre-training: SelectiveMAE

Questions

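- Remote sensing images are highly redundant. Is it necessary to reconstruct every masked patch?
- Can the unmasked patches fed to the MAE encoder be compressed further to speed up pre-training?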

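Overall Framework

Partial Reconstruction

Problem 1: In RS images, if patches are sampled at random and most of them are masked for reconstruction, the reconstructed patches may be semantically poor (for example, redundant background).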

Let the input image be $x \in \mathbb{R}^{H \times W \times C}$. It is divided into non-overlapping patches $x^p \in \mathbb{R}^{N \times (p^2 C)}$, where $p$ is the patch size and $N = \frac{H \times W}{p^2}$.
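As a minimal sketch of this patchify step, assuming a channels-last NumPy image whose height and width are divisible by $p$ (the helper name `patchify` is hypothetical), a reshape and transpose yield $N = HW/p^2$ rows of length $p^2 C$:

```python
import numpy as np

def patchify(x: np.ndarray, p: int) -> np.ndarray:
    """Split an H x W x C image into N = (H * W) / p^2 non-overlapping p x p
    patches, each flattened to length p^2 * C, in row-major patch order."""
    H, W, C = x.shape
    assert H % p == 0 and W % p == 0, "H and W must be divisible by p"
    # (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C) -> (N, p * p * C)
    patches = x.reshape(H // p, p, W // p, p, C).transpose(0, 2, 1, 3, 4)
    return patches.reshape(-1, p * p * C)

x = np.random.rand(224, 224, 3)
xp = patchify(x, 16)
print(xp.shape)  # (196, 768)
```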

MAE randomly selects a fraction $m \in [0, 1]$ of the patches (here $m = 85\%$) for masking and reconstructs the masked patches with an encoder-decoder architecture.
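MAE-style random masking can be sketched as below. This is an illustrative reimplementation, not the MAE or SelectiveMAE code; `random_masking` is a hypothetical helper, and the number of visible patches is computed as `int(N * (1 - m))`:

```python
import numpy as np

def random_masking(N: int, m: float, rng: np.random.Generator):
    """Shuffle patch indices, keep the first int(N * (1 - m)) as visible
    tokens, and mask the rest. Returns (visible, masked) sorted indices."""
    n_keep = int(N * (1 - m))
    perm = rng.permutation(N)
    visible = np.sort(perm[:n_keep])
    masked = np.sort(perm[n_keep:])
    return visible, masked

rng = np.random.default_rng(0)
visible, masked = random_masking(196, 0.85, rng)
print(len(visible), len(masked))  # 29 167
```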

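This paper introduces a reconstruction ratio $r \in [0, m]$ (here $r = 25\%$). Instead of reconstructing all masked patches, it computes HOG (Histogram of Oriented Gradients) features of the patches before masking and reconstructs only the top-$r$ fraction of patches ranked by these features. The formula is as follows: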
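The HOG-guided partial reconstruction can be sketched as follows. Several details are assumptions, not taken from the paper: patches are grayscale $p \times p$ arrays, HOG is simplified to one magnitude-weighted orientation histogram per patch (no cells, blocks, or block normalization), each patch is scored by the L2 norm of that histogram, and the $\lfloor N r \rfloor$ highest-scoring masked patches become the reconstruction targets. Both helper names are hypothetical.

```python
import numpy as np

def hog_descriptor(patch: np.ndarray, bins: int = 9) -> np.ndarray:
    """Simplified HOG for one grayscale patch: a histogram of unsigned gradient
    orientations weighted by gradient magnitude (no cells, blocks, or normalization)."""
    gy, gx = np.gradient(patch.astype(np.float64))  # derivatives along rows, then columns
    mag = np.hypot(gx, gy)
    ang = np.mod(np.arctan2(gy, gx), np.pi)  # fold orientations into [0, pi)
    hist, _ = np.histogram(ang, bins=bins, range=(0.0, np.pi), weights=mag)
    return hist

def select_reconstruction_targets(patches: np.ndarray, masked_idx: np.ndarray,
                                  N: int, r: float) -> np.ndarray:
    """Score each masked patch by the L2 norm of its HOG histogram and keep the
    floor(N * r) highest-scoring ones as reconstruction targets."""
    masked_idx = np.asarray(masked_idx)
    scores = np.array([np.linalg.norm(hog_descriptor(patches[i])) for i in masked_idx])
    k = int(np.floor(N * r))
    top = np.argsort(-scores, kind="stable")[:k]
    return np.sort(masked_idx[top])

# Toy example: 16 grayscale 8x8 patches, all flat except two intensity ramps.
N, p = 16, 8
patches = np.zeros((N, p, p))
patches[3] = np.tile(np.arange(p, dtype=np.float64), (p, 1))  # horizontal ramp
patches[7] = patches[3].T                                      # vertical ramp
masked_idx = np.array([1, 3, 5, 7, 9, 11, 13, 15])
targets = select_reconstruction_targets(patches, masked_idx, N, r=0.125)
print(targets)  # [3 7]
```

In this sketch, flat patches have zero gradient energy, so they are the last candidates to be chosen as targets, which mirrors the goal of skipping redundant background.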

Progressive Semantic Token Selection

Problem 2: Gradient explosion or loss divergence often occurs during training.

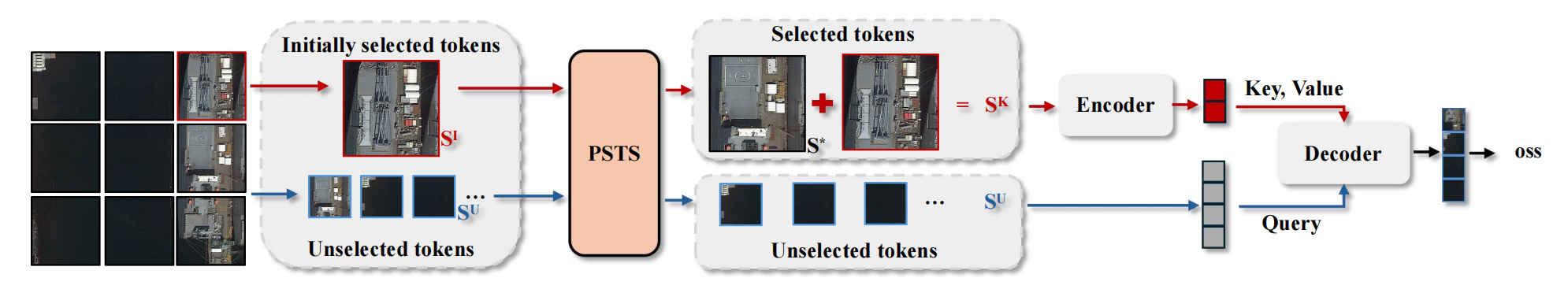

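Inspired by curriculum learning [7, 26, 78], which follows an easy-to-hard learning principle, this paper introduces a Progressive Semantic Token Selection (PSTS) module for patch selection. The module first selects a limited number of patches and then, during training, selects additional patches based on them, shifting dynamically from easy-to-learn, semantically similar patches to more challenging, complementary ones.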

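First, the HOG selection strategy picks initial patches from $S^N = \{x^p_i\}_{i=1}^{N}$ at a ratio $s \in [0, \frac{1-m}{2}]$. The number of tokens is then increased step by step, guiding the model toward more challenging samples while preserving the final masking ratio. The selected initial tokens are defined as follows: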
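Selecting $S^I$ can be sketched as taking the $\lfloor N s \rfloor$ patches with the highest HOG scores. In this sketch the per-patch HOG scores are passed in precomputed, treating $S^U$ as all remaining patches is an assumption, and `initial_tokens` is a hypothetical name:

```python
import numpy as np

def initial_tokens(hog_scores: np.ndarray, m: float, s: float):
    """Return (S_I, S_U): S_I holds the floor(N * s) patches with the highest HOG
    scores; S_U holds every remaining patch index (assumed candidate pool)."""
    N = len(hog_scores)
    if not 0.0 <= s <= (1.0 - m) / 2.0:
        raise ValueError("s must lie in [0, (1 - m) / 2]")
    k = int(np.floor(N * s))
    order = np.argsort(-np.asarray(hog_scores), kind="stable")
    return np.sort(order[:k]), np.sort(order[k:])

rng = np.random.default_rng(0)
scores = rng.random(196)          # stand-in for per-patch HOG scores
S_I, S_U = initial_tokens(scores, m=0.85, s=0.05)
print(len(S_I), len(S_U))         # 9 187
```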

Next, guided by $S^I$, tokens are selected from $S^U$. The cosine distance between tokens is expressed as follows:
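For reference, the standard cosine distance $d(a, b) = 1 - \frac{a^\top b}{\|a\|_2 \|b\|_2}$ can be computed for every pair of candidate and initial tokens as below. These notes do not say which token features are compared (raw patch pixels or embeddings), so the inputs are simply assumed to be $(n, d)$ feature arrays; the function name is hypothetical.

```python
import numpy as np

def cosine_distance_matrix(A: np.ndarray, B: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Pairwise cosine distance 1 - <a, b> / (||a|| ||b||).
    A: (n_u, d) features of candidate tokens (S^U); B: (n_i, d) features of S^I.
    Returns an (n_u, n_i) matrix with entries in [0, 2] up to rounding."""
    A_n = A / np.maximum(np.linalg.norm(A, axis=1, keepdims=True), eps)
    B_n = B / np.maximum(np.linalg.norm(B, axis=1, keepdims=True), eps)
    return 1.0 - A_n @ B_n.T

A = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
B = np.array([[2.0, 0.0]])
print(cosine_distance_matrix(A, B).ravel())  # [0. 1. 2.]
```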

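For each training stage $\zeta$, according to that stage's selection criterion, the distance between a token in $S^U$ and the initial token set $S^I$ is defined as follows: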
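The exact stage-dependent formula is not reproduced in these notes, so the following is only a hypothetical illustration of the easy-to-hard idea. The token-to-set distance is taken as the mean cosine distance to $S^I$; the first half of the stages ranks the most similar candidates first, and the remaining stages rank the most distant (complementary) candidates first. The mean aggregation, the two-phase switch, and both function names are assumptions.

```python
import numpy as np

def set_distance(dist_matrix: np.ndarray) -> np.ndarray:
    """Hypothetical token-to-set distance: mean cosine distance from each candidate
    in S^U (rows) to all tokens of S^I (columns)."""
    return dist_matrix.mean(axis=1)

def stage_ranking(D: np.ndarray, stage: int, num_stages: int) -> np.ndarray:
    """Hypothetical easy-to-hard criterion: rank candidates by ascending distance
    (most similar first) in the first half of training, then by descending
    distance (most complementary first) in the remaining stages."""
    if stage < num_stages // 2:
        return np.argsort(D, kind="stable")
    return np.argsort(-D, kind="stable")

dist = np.array([[0.1, 0.3], [0.9, 0.7], [0.5, 0.5]])
D = set_distance(dist)                             # roughly [0.2, 0.8, 0.5]
print(stage_ranking(D, stage=0, num_stages=4))     # [0 2 1]
print(stage_ranking(D, stage=3, num_stages=4))     # [1 2 0]
```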

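Finally, $\lfloor N \times (1-m-s) \rfloor$ tokens are sampled from $S^U$ and combined with $S^I$ to form $S^K$. Since $S^I$ contains about $N s$ tokens, $S^K$ holds roughly $N(1-m)$ tokens, which preserves the masking ratio $m$. This is expressed as follows: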
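Forming $S^K$ can be sketched as below, assuming `ranking` is any ordering of the candidates in $S^U$ produced by the stage-$\zeta$ criterion; `form_visible_set` and the toy inputs are hypothetical:

```python
import numpy as np

def form_visible_set(S_I: np.ndarray, S_U: np.ndarray, ranking: np.ndarray,
                     N: int, m: float, s: float) -> np.ndarray:
    """S^K = S^I plus the first floor(N * (1 - m - s)) candidates of S^U, taken in
    the order given by `ranking` (positions into S_U from a stage criterion)."""
    n_extra = int(np.floor(N * (1.0 - m - s)))
    extra = S_U[np.asarray(ranking)[:n_extra]]
    return np.sort(np.concatenate([S_I, extra]))

N, m, s = 196, 0.85, 0.05
S_I = np.arange(0, 90, 10)                    # toy initial set, floor(196 * 0.05) = 9 tokens
S_U = np.setdiff1d(np.arange(N), S_I)         # remaining candidates
ranking = np.arange(len(S_U))                 # toy ordering of S_U
S_K = form_visible_set(S_I, S_U, ranking, N, m, s)
print(len(S_K))                               # 28
```

With these numbers the sketch keeps $9 + 19 = 28$ tokens, one fewer than $\lfloor 196 \times 0.15 \rfloor = 29$, because both counts are floored separately; how the paper reconciles this rounding is not stated in these notes.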

Algorithm 1: Progressive Semantic Token Selection
